Creating Three Kafka Brokers on a Single Node with KRaft Mode

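Apache Kafka is a popular distributed streaming platform that lets users publish and subscribe to streams of records, similar to a message queue or enterprise messaging system. In this tutorial, we will set up three Kafka brokers on a single node using KRaft (Kafka Raft) mode, in which Kafka manages its cluster metadata itself instead of relying on ZooKeeper. Each of the three servers acts as both a broker and a KRaft controller.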

Prerequisites

Before we begin, make sure the following are available. The commands assume a Debian-based system such as Ubuntu, and Step 1 installs both:

- Java 8 or later

- Apache Kafka 3.4 or later
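Once Java is installed (Step 1 installs it with apt), you can check the version requirement from a script. The sketch below assumes bash and a java binary on the PATH. The names java_major_version and check_java are hypothetical helpers and are not part of Kafka or the JDK. The helper handles both version schemes. Java 8 and earlier report 1.x (for example 1.8.0_362), while Java 9 and later report the major version first (for example 17.0.6).

```shell
#!/usr/bin/env bash
# java_major_version VERSION (hypothetical helper)
# Extracts the major version from "1.8.0_362" (1.x scheme, Java 8 and earlier)
# or "17.0.6" (Java 9 and later).
java_major_version() {
  local v=$1
  case "$v" in
    1.*) v=${v#1.} ;;    # "1.8.0_362" -> "8.0_362"
  esac
  echo "${v%%[!0-9]*}"   # keep only the leading digits
}

# check_java (hypothetical helper): requires Java 8 or later.
check_java() {
  local raw major
  # java -version writes to stderr; its first line looks like:
  #   openjdk version "17.0.6" 2023-01-17
  raw=$(java -version 2>&1 | awk -F'"' 'NR == 1 { print $2 }')
  major=$(java_major_version "$raw")
  if [ -z "$major" ] || [ "$major" -lt 8 ]; then
    echo "Java 8 or later is required (found: ${raw:-none})" >&2
    return 1
  fi
  echo "Java $major found"
}
```

Run check_java after Step 1. It prints the detected major version, or returns status 1 if Java is missing or too old.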

Step 1: Install Java and download Kafka

sudo apt-get update -y && sudo apt-get install -y curl

sudo apt-get install -y default-jre

mkdir -p ~/Downloads ~/kafka

# Kafka 3.4.0 is no longer on downloads.apache.org; older releases are kept on archive.apache.org
curl -fL "https://archive.apache.org/dist/kafka/3.4.0/kafka_2.13-3.4.0.tgz" -o ~/Downloads/kafka.tgz

tar -xvzf ~/Downloads/kafka.tgz -C ~/kafka --strip-components=1

Step 2: Configure Kafka
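Before editing the configuration, it is worth confirming that the archive extracted where the remaining commands expect it. If the strip option is left off the tar command, the files land in a nested kafka_2.13-3.4.0 directory, and every ~/kafka/bin path in this tutorial then fails. The check below is a minimal sketch. check_kafka_layout is a hypothetical helper, and the files it looks for are simply the scripts and the sample KRaft config this tutorial uses.

```shell
#!/usr/bin/env bash
# check_kafka_layout [DIR] (hypothetical helper)
# Succeeds if DIR (default ~/kafka) contains the scripts and the KRaft sample
# config used in this tutorial; prints each missing file otherwise.
check_kafka_layout() {
  local dir=${1:-$HOME/kafka} missing=0 f
  for f in bin/kafka-storage.sh bin/kafka-server-start.sh bin/kafka-topics.sh \
           config/kraft/server.properties; do
    if [ ! -e "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running check_kafka_layout ~/kafka prints nothing on success. Otherwise it lists the missing files and returns a non-zero status.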

Next, configure Kafka to run in KRaft mode. Follow these steps:

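- Create server1.properties, server2.properties and server3.properties in the KRaft config directory (~/kafka/config/kraft) by copying the default server.properties file found there, for example: cp ~/kafka/config/kraft/server.properties ~/kafka/config/kraft/server1.properties

- In each file, change the following lines with a text editor. Leave the other settings inherited from the default file (such as process.roles and controller.listener.names) as they are: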
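The per-server values in the listings that follow use a fixed pattern. Server N gets node.id=N, client port 9091+N and controller port 19091+N, while controller.quorum.voters is identical in all three files. If you prefer not to edit three files by hand, the copy-and-edit can be scripted. This sketch assumes GNU sed (as on Ubuntu). It also assumes each key already appears uncommented in the default server.properties, because sed only rewrites lines that exist. make_kraft_configs is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# make_kraft_configs [DIR] (hypothetical helper)
# Copies DIR/server.properties to server1..3.properties and rewrites the
# per-server lines. Assumes GNU sed (-i without a backup suffix).
make_kraft_configs() {
  local dir=${1:-$HOME/kafka/config/kraft} n
  local voters="1@localhost:19092,2@localhost:19093,3@localhost:19094"
  for n in 1 2 3; do
    local out="$dir/server$n.properties"
    local port=$((9091 + n)) ctrl=$((19091 + n))   # 9092..9094 and 19092..19094
    cp "$dir/server.properties" "$out"
    # Anchored patterns, so "listeners=" does not match "advertised.listeners=".
    sed -i \
      -e "s|^node\.id=.*|node.id=$n|" \
      -e "s|^controller\.quorum\.voters=.*|controller.quorum.voters=$voters|" \
      -e "s|^listeners=.*|listeners=PLAINTEXT://:$port,CONTROLLER://:$ctrl|" \
      -e "s|^advertised\.listeners=.*|advertised.listeners=PLAINTEXT://localhost:$port|" \
      -e "s|^log\.dirs=.*|log.dirs=/tmp/server$n/kraft-combined-logs|" \
      "$out"
  done
}
```

After running make_kraft_configs ~/kafka/config/kraft, open one of the generated files and confirm that its values match the listing for that server.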

server1

nano ~/kafka/config/kraft/server1.properties

node.id=1

controller.quorum.voters=1@localhost:19092,2@localhost:19093,3@localhost:19094

listeners=PLAINTEXT://:9092,CONTROLLER://:19092

advertised.listeners=PLAINTEXT://localhost:9092

log.dirs=/tmp/server1/kraft-combined-logs

server2

nano ~/kafka/config/kraft/server2.properties

node.id=2

controller.quorum.voters=1@localhost:19092,2@localhost:19093,3@localhost:19094

listeners=PLAINTEXT://:9093,CONTROLLER://:19093

advertised.listeners=PLAINTEXT://localhost:9093

log.dirs=/tmp/server2/kraft-combined-logs

server3

nano ~/kafka/config/kraft/server3.properties

node.id=3

controller.quorum.voters=1@localhost:19092,2@localhost:19093,3@localhost:19094

listeners=PLAINTEXT://:9094,CONTROLLER://:19094

advertised.listeners=PLAINTEXT://localhost:9094

log.dirs=/tmp/server3/kraft-combined-logs

Step 3: Kafka cluster ID creation and log directory setup

~/kafka/bin/kafka-storage.sh random-uuid

~/kafka/bin/kafka-storage.sh format -t <uuid> -c ~/kafka/config/kraft/server1.properties

~/kafka/bin/kafka-storage.sh format -t <uuid> -c ~/kafka/config/kraft/server2.properties

~/kafka/bin/kafka-storage.sh format -t <uuid> -c ~/kafka/config/kraft/server3.properties

Replace <uuid> with the ID printed by the random-uuid command. All three servers must be formatted with the same cluster ID.
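Every server must be formatted with the same cluster ID, so capturing the ID in a shell variable avoids copy-and-paste mistakes. The function below is a minimal sketch of the three format commands above. format_kraft_storage is a hypothetical helper name. It assumes that the server1 to server3 properties files from Step 2 exist under the given Kafka directory.

```shell
#!/usr/bin/env bash
# format_kraft_storage [KAFKA_HOME] (hypothetical helper)
# Generates one cluster ID and formats every server's log directory with it,
# stopping at the first command that fails.
format_kraft_storage() {
  local home=${1:-$HOME/kafka} uuid n
  uuid=$("$home/bin/kafka-storage.sh" random-uuid) || return 1
  echo "cluster id: $uuid"
  for n in 1 2 3; do
    "$home/bin/kafka-storage.sh" format -t "$uuid" \
      -c "$home/config/kraft/server$n.properties" || return 1
  done
}
```

format_kraft_storage ~/kafka prints the generated cluster ID, followed by the output of each format command.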

Step 4: Start the Kafka servers

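To start the Kafka servers, run the following commands. The -daemon flag runs each server in the background, so all three can be started from the same terminal. To watch a server's log output instead, omit -daemon and run each command in its own terminal window.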

~/kafka/bin/kafka-server-start.sh -daemon ~/kafka/config/kraft/server1.properties

~/kafka/bin/kafka-server-start.sh -daemon ~/kafka/config/kraft/server2.properties

~/kafka/bin/kafka-server-start.sh -daemon ~/kafka/config/kraft/server3.properties

Step 5: Create a Kafka topic

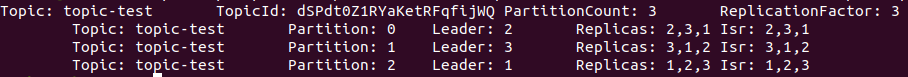
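With -daemon, kafka-server-start.sh returns immediately, so the servers may still be starting when you reach this step. Because the topic below uses a replication factor of 3, creating it fails if fewer than three brokers are up. A small wait loop on the three client ports (9092 to 9094) avoids running the command too early. This is a sketch that assumes bash, since it uses bash's /dev/tcp redirection. wait_for_port and wait_for_brokers are hypothetical helpers. An open port only shows that a process is listening; it does not prove that the controller quorum has elected a leader.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT TIMEOUT_SECONDS (hypothetical helper)
# Returns 0 as soon as a TCP connection to HOST:PORT succeeds,
# or 1 once TIMEOUT_SECONDS have passed without one.
wait_for_port() {
  local host=$1 port=$2 timeout=$3 waited=0
  # Open and immediately close a connection inside a subshell (bash-only /dev/tcp).
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# wait_for_brokers [TIMEOUT_SECONDS] (hypothetical helper)
# Checks the three client listeners configured in Step 2.
wait_for_brokers() {
  local port
  for port in 9092 9093 9094; do
    if ! wait_for_port localhost "$port" "${1:-30}"; then
      echo "nothing is listening on localhost:$port" >&2
      return 1
    fi
  done
  echo "all three brokers are accepting connections"
}
```

Run wait_for_brokers before the create command. It takes an optional timeout in seconds per port, defaulting to 30. To inspect the quorum itself, Kafka 3.3 and later also ship kafka-metadata-quorum.sh. For example, ~/kafka/bin/kafka-metadata-quorum.sh --bootstrap-server localhost:9092 describe --status reports the current leader and voters.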

~/kafka/bin/kafka-topics.sh --create --topic topic-test --partitions 3 --replication-factor 3 --bootstrap-server localhost:9092

List and describe the topic

~/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

~/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic topic-test
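In the describe output, each partition line lists its Replicas and its in-sync replicas (Isr). With all three brokers healthy, every Isr should contain all three broker IDs. kafka-topics.sh can also filter for problems directly when --under-replicated-partitions is added to --describe. The function below is a hypothetical helper that turns the same check into an exit code for scripts. It assumes the tab-separated "Replicas: 1,2,3<TAB>Isr: 1,2,3" layout that kafka-topics.sh prints in the 3.x releases, so treat it as a sketch.

```shell
#!/usr/bin/env bash
# isr_complete (hypothetical helper)
# Reads kafka-topics.sh --describe output on stdin, prints every partition
# whose ISR list is shorter than its replica list, and returns 1 if any exist.
# Assumes tab-separated "Partition: N", "Replicas: a,b,c" and "Isr: a,b,c" fields.
isr_complete() {
  awk -F'\t' '
    /Partition: / {
      part = ""; replicas = ""; isr = ""
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^Partition: /)     part = substr($i, 12)
        else if ($i ~ /^Replicas: /) replicas = substr($i, 11)
        else if ($i ~ /^Isr: /)      isr = substr($i, 6)
      }
      if (split(isr, a, ",") < split(replicas, b, ",")) {
        print "partition " part " has ISR [" isr "] of replicas [" replicas "]"
        bad = 1
      }
    }
    END { exit bad }
  '
}
```

Usage: ~/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --describe --topic topic-test | isr_complete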

Step 6: Producer and Consumer

Run each of the following commands in its own terminal:

~/kafka/bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic topic-test

~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic topic-test

Type a message in the producer terminal and press Enter; the consumer terminal should print the same message.
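The same check can be scripted without two terminals, which is handy for re-testing the cluster after a restart. This sketch sends one uniquely tagged message through the console producer, then reads the topic from the beginning with the console consumer. round_trip is a hypothetical helper, and the 10-second --timeout-ms value is an arbitrary choice.

```shell
#!/usr/bin/env bash
# round_trip [KAFKA_HOME] [TOPIC] (hypothetical helper)
# Produces one uniquely tagged message and succeeds if the console consumer
# reads it back when consuming the topic from the beginning.
round_trip() {
  local home=${1:-$HOME/kafka} topic=${2:-topic-test}
  local msg="round-trip-$(date +%s)-$$"
  echo "$msg" | "$home/bin/kafka-console-producer.sh" \
    --bootstrap-server localhost:9092 --topic "$topic" || return 1
  # Consume from the start; the consumer exits after 10 s without new records.
  "$home/bin/kafka-console-consumer.sh" --bootstrap-server localhost:9092 \
    --topic "$topic" --from-beginning --timeout-ms 10000 \
    | grep -qxF "$msg"
}
```

round_trip ~/kafka topic-test returns 0 if the message came back. Because the consumer waits for its timeout before exiting, the check takes about ten seconds even on success.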