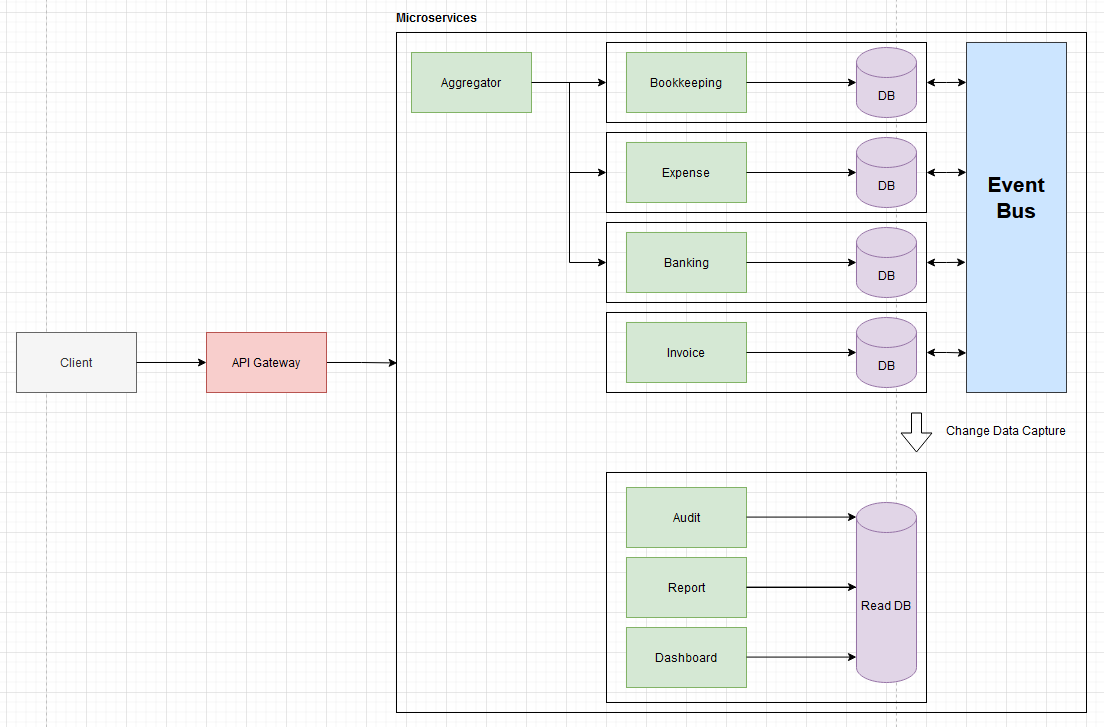

This post expands on my last one, "Learn Domain Driven Design by building an invoice app", in which I built a monolithic invoice app. Because I want to learn about microservices, I split that app into multiple services.

When should we use microservices?

This question crossed my mind when I first learned about microservices. The problem with a monolith is that, if we are not careful, it grows more complicated over time, and it becomes harder to maintain, scale, and extend with new features. As the team grows, everyone starts stepping on each other's toes. Microservices let us split the system and scale its parts independently, but they bring their own complexity; for example, we now have to deal with eventual consistency.

In my opinion, microservices only make sense when the teams are too big and scaling the monolith is expensive. Otherwise, I would stay with a monolith for as long as I could.

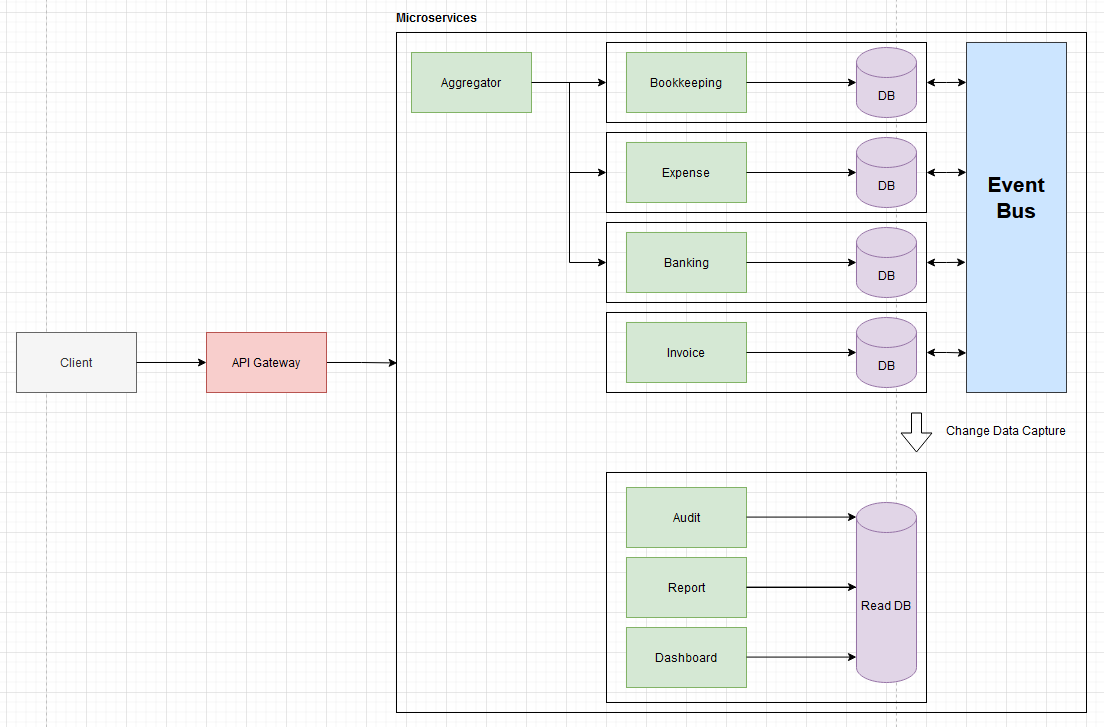

Architecture

How do we split the services?

Should we break the app into services that are as small as possible, or split it by bounded context? If we slice the services too thinly, we risk coupling them tightly: they need service discovery and synchronous communication to get anything done. We then get the worst of both worlds, a so-called distributed monolith, in which the services call each other's endpoints instead of calling functions in the same process.

If the monolith is split by bounded context, each service should be independent, and each team can develop and deploy its service easily.

My rule of thumb: services should operate independently of one another, and communication between them should be asynchronous.

Aggregator pattern

Sometimes the app needs data from several services at once. In that case we can use the aggregator pattern: an aggregator service sends requests to each of those services and combines their responses into one result.
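The post doesn't show the aggregator's code, so here is a minimal Python sketch of the idea. `fetch_open_invoices` and `fetch_open_bills` are hypothetical stand-ins for HTTP calls to the invoice and expense services; they return canned data so the sketch runs on its own. The aggregator queries both concurrently and merges the results into one response.

```python
import asyncio
from typing import Any

# Hypothetical stand-ins for HTTP calls to two services behind the gateway.
# They return canned data so the sketch runs without any network.
async def fetch_open_invoices(workspace_id: str) -> list[dict[str, Any]]:
    await asyncio.sleep(0.01)  # pretend this is a request to the invoice service
    return [{"id": "inv-1", "amount": 150.0}, {"id": "inv-2", "amount": 50.0}]

async def fetch_open_bills(workspace_id: str) -> list[dict[str, Any]]:
    await asyncio.sleep(0.01)  # pretend this is a request to the expense service
    return [{"id": "bill-1", "amount": 80.0}]

async def get_overview(workspace_id: str) -> dict[str, float]:
    # Query both services concurrently, then combine their answers.
    invoices, bills = await asyncio.gather(
        fetch_open_invoices(workspace_id),
        fetch_open_bills(workspace_id),
    )
    receivable = sum(i["amount"] for i in invoices)
    payable = sum(b["amount"] for b in bills)
    return {"receivable": receivable, "payable": payable, "net": receivable - payable}

if __name__ == "__main__":
    print(asyncio.run(get_overview("workspace-1")))
```

A real aggregator would also need timeouts and a decision about what to return when one of the services is down, since it depends on all of them synchronously.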

Use RabbitMQ for async communication

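We can communicate between the services through a message queue to keep them loosely coupled. I applied the outbox pattern to make sure each event actually reaches the message broker: the event is written to an outbox table in the same database transaction as the business data, and is published to the broker afterwards.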
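A minimal sketch of the outbox pattern, using SQLite from the standard library in place of the service's real database. The table layout, the `InvoiceCreated` event name, and the `publish` callback (which stands in for the RabbitMQ publisher) are all assumptions for illustration, not the app's actual code.

```python
import json
import sqlite3
import uuid
from typing import Callable

def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        CREATE TABLE invoices (id TEXT PRIMARY KEY, status TEXT NOT NULL);
        CREATE TABLE outbox (
            id TEXT PRIMARY KEY,
            event_type TEXT NOT NULL,
            payload TEXT NOT NULL,
            published INTEGER NOT NULL DEFAULT 0
        );
        """
    )

def create_invoice(conn: sqlite3.Connection, invoice_id: str) -> None:
    # The invoice row and its event are committed in ONE transaction:
    # either both are stored or neither is, so no event can be lost.
    with conn:
        conn.execute(
            "INSERT INTO invoices (id, status) VALUES (?, 'InProgress')", (invoice_id,)
        )
        conn.execute(
            "INSERT INTO outbox (id, event_type, payload) VALUES (?, ?, ?)",
            (str(uuid.uuid4()), "InvoiceCreated", json.dumps({"invoice_id": invoice_id})),
        )

def relay_outbox(conn: sqlite3.Connection, publish: Callable[[str, str], None]) -> int:
    # Meant to run periodically. A row is marked as published only after
    # publish() returns, so a crash in between means the event is sent again
    # on the next run (at-least-once delivery; consumers must be idempotent).
    rows = conn.execute(
        "SELECT id, event_type, payload FROM outbox WHERE published = 0 ORDER BY rowid"
    ).fetchall()
    for event_id, event_type, payload in rows:
        publish(event_type, payload)
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (event_id,))
    return len(rows)

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    create_invoice(conn, "inv-1")
    # Stand-in for a RabbitMQ publisher: just print the event.
    relay_outbox(conn, lambda event_type, payload: print(event_type, payload))
```

The key design choice is that the request handler never talks to the broker directly; if RabbitMQ is unavailable, events simply wait in the outbox until the relay succeeds.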

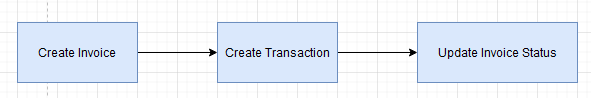

Handle eventual consistency

- Instead of ensuring that the system is consistent at all times, we can accept that it will become consistent at some point in the future.

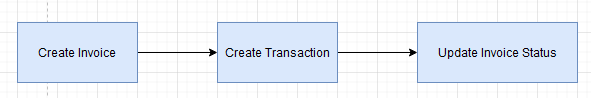

- For instance, when a transaction is created, the status of an invoice changes from InProgress to Issued. If the system fails to update the invoice's status, a batch procedure can restore the invoice to its original condition. The user can also bring the invoice back to a consistent state by saving it a second time, so the procedure must be idempotent: running it more than once must give the same result as running it once.
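To make the idempotency requirement concrete, here is a small Python sketch of the InProgress to Issued update. The `TransactionCreated`-style handler name, the event IDs, and the in-memory `InvoiceStore` are hypothetical; in a real service the processed-ID record and the status update would be written in the same database transaction.

```python
from dataclasses import dataclass, field

@dataclass
class Invoice:
    id: str
    status: str = "InProgress"

@dataclass
class InvoiceStore:
    # In-memory stand-in for the invoice service's database.
    invoices: dict[str, Invoice] = field(default_factory=dict)
    processed_event_ids: set[str] = field(default_factory=set)

def on_transaction_created(store: InvoiceStore, event_id: str, invoice_id: str) -> bool:
    """Move the invoice to Issued; return True only if something changed."""
    # Duplicate delivery of the same message: nothing to do.
    if event_id in store.processed_event_ids:
        return False
    store.processed_event_ids.add(event_id)
    invoice = store.invoices[invoice_id]
    # Only InProgress -> Issued is allowed, so repeating the update
    # (a redelivered message, or the user saving again) is harmless.
    if invoice.status == "InProgress":
        invoice.status = "Issued"
        return True
    return False

if __name__ == "__main__":
    store = InvoiceStore(invoices={"inv-1": Invoice("inv-1")})
    on_transaction_created(store, "evt-1", "inv-1")
    on_transaction_created(store, "evt-1", "inv-1")  # duplicate, ignored
    print(store.invoices["inv-1"].status)
```

Two guards are combined here: deduplication by event ID handles redelivered messages, and the state check makes a second attempt with a new ID a no-op.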

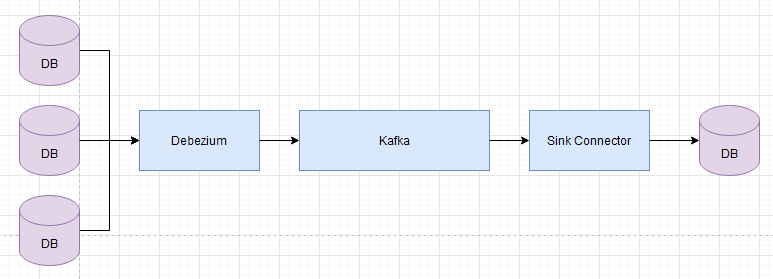

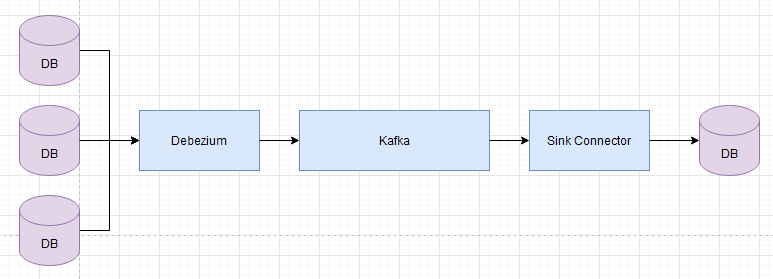

Use Debezium and Kafka to source and sink data

Source

```json
{
  "name": "invoice-invoice-connector",
  "config": {
    "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
    "tasks.max": "1",
    "database.hostname": "postgres",
    "database.port": "5432",
    "database.user": "postgres",
    "database.password": "postgres",
    "database.dbname": "Invoice_Invoice",
    "topic.prefix": "invoice",
    "schema.include.list": "public",
    "slot.name": "invoice"
  }
}
```
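The post doesn't say how this JSON is submitted. One common way is Kafka Connect's REST API; the sketch below assumes Connect is reachable at `localhost:8083` (its default REST port) and uses `PUT /connectors/{name}/config`, which creates the connector or updates it if it already exists. The alternative, `POST /connectors`, takes the whole `{name, config}` document instead. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Kafka Connect's REST API is reachable here (8083 is its default port).
CONNECT_URL = "http://localhost:8083"

def build_register_request(connector: dict, connect_url: str = CONNECT_URL) -> urllib.request.Request:
    """Build a PUT /connectors/{name}/config request for a {name, config} document."""
    return urllib.request.Request(
        url=f"{connect_url}/connectors/{connector['name']}/config",
        # This endpoint takes only the inner "config" object, not the whole document.
        data=json.dumps(connector["config"]).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )

def register(path: str) -> int:
    """Read a connector JSON file (e.g. the source config above) and submit it."""
    with open(path, encoding="utf-8") as f:
        connector = json.load(f)
    with urllib.request.urlopen(build_register_request(connector)) as resp:
        return resp.status  # HTTP status code returned by Kafka Connect
```

Because the PUT endpoint is create-or-update, the same script can be rerun after editing the config file.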

Sink

```json
{
  "name": "invoice-invoices-sink",
  "config": {
    "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
    "tasks.max": "1",
    "topics": "invoice.public.invoices",
    "connection.url": "jdbc:postgresql://host.docker.internal:5433/invoice_ms?user=postgres&password=postgres",
    "transforms": "unwrap,date,due_date",
    "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
    "transforms.unwrap.drop.tombstones": "false",
    "auto.create": "true",
    "auto.evolve": "true",
    "insert.mode": "upsert",
    "delete.enabled": "true",
    "pk.fields": "id",
    "pk.mode": "record_key",
    "table.name.format": "invoices",
    "transforms.date.type": "org.apache.kafka.connect.transforms.TimestampConverter$Value",
    "transforms.date.target.type": "Timestamp",
    "transforms.date.field": "date",
    "transforms.date.format": "yyyy-MM-dd'T'HH:mm:ss'Z'",
    "transforms.due_date.type": "org.apache.kafka.connect.transforms.TimestampConverter$Value",
    "transforms.due_date.target.type": "Timestamp",
    "transforms.due_date.field": "due_date",
    "transforms.due_date.format": "yyyy-MM-dd'T'HH:mm:ss'Z'"
  }
}
```
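To see what the sink's transform chain does to each message, here is a simplified Python model of it. This is not Debezium's or Kafka Connect's code: it assumes the change event is a plain dict with `op` and `after` fields, that dates arrive as strings like `2023-05-01T00:00:00Z`, and (as I understand the SMT's default delete handling) that the delete event itself is dropped while the tombstone that follows it drives the delete in the sink.

```python
from datetime import datetime, timezone
from typing import Any, Optional

# Python equivalent of the Java pattern yyyy-MM-dd'T'HH:mm:ss'Z' (the Z is a literal).
DATE_FORMAT = "%Y-%m-%dT%H:%M:%SZ"
DATE_FIELDS = ("date", "due_date")

Row = dict[str, Any]

def to_sink_action(key: Row, value: Optional[Row]) -> Optional[tuple[str, Row, Optional[Row]]]:
    """Simplified model of unwrap + TimestampConverter feeding the JDBC sink."""
    if value is None:
        # Tombstone, kept because drop.tombstones is "false";
        # with delete.enabled=true the sink deletes the row with this key.
        return ("delete", key, None)
    if value["op"] == "d":
        # Assumption: default delete handling drops the delete event itself
        # and lets the following tombstone carry the delete.
        return None
    # c = insert, u = update, r = snapshot read: keep only the new row state.
    row = dict(value["after"])
    for name in DATE_FIELDS:
        if isinstance(row.get(name), str):
            # TimestampConverter parses the string into a timestamp (UTC).
            row[name] = datetime.strptime(row[name], DATE_FORMAT).replace(tzinfo=timezone.utc)
    return ("upsert", key, row)
```

With `insert.mode` set to `upsert` and `pk.mode` set to `record_key`, replaying the same create or update event writes the same row again, which fits the eventual consistency approach above.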

HAProxy as API Gateway

```haproxy
global
    maxconn 4096
    daemon
    log 127.0.0.1 local0
    log 127.0.0.1 local1 notice
    lua-load /etc/haproxy/cors.lua
    setenv APP_PATH localhost:5010
    setenv BOOKKEEPING_API_S1_PATH host.docker.internal:5012
    setenv BOOKKEEPING_API_S2_PATH host.docker.internal:5013
    setenv INVOICE_API_S1_PATH host.docker.internal:5014
    setenv EXPENSE_API_S1_PATH host.docker.internal:5015
    setenv BANKING_API_S1_PATH host.docker.internal:5016
    setenv AGGREGATOR_API_S1_PATH host.docker.internal:5017
    setenv AUDIT_API_S1_PATH host.docker.internal:5018
    setenv DASHBOARD_API_S1_PATH host.docker.internal:5019
    setenv REPORT_API_S1_PATH host.docker.internal:5020

defaults
    timeout connect 10s
    timeout client 30s
    timeout server 30s
    log global
    mode http
    option httplog
    maxconn 3000
    stats enable
    option forwardfor
    option http-server-close
    stats uri /haproxyStats
    stats auth admin:AbcXyz123!!!

frontend api_gateway
    bind *:80
    http-request lua.cors "GET,PUT,POST,DELETE" "${APP_PATH}" "*"
    http-response lua.cors

    acl PATH_bookkeeping path_beg -i /api/journals
    acl PATH_bookkeeping path_beg -i /api/accounts
    acl PATH_bookkeeping path_beg -i /api/userWorkspaces
    acl PATH_bookkeeping path_beg -i /api/transactions
    acl PATH_invoice path_beg -i /api/products
    acl PATH_invoice path_beg -i /api/customers
    acl PATH_invoice path_beg -i /api/invoices
    acl PATH_invoice path_beg -i /api/credits
    acl PATH_invoice path_beg -i /api/invoicePayments
    acl PATH_expense path_beg -i /api/vendors
    acl PATH_expense path_beg -i /api/bills
    acl PATH_expense path_beg -i /api/billPayments
    acl PATH_expense path_beg -i /api/expensePayments
    acl PATH_banking path_beg -i /api/bankTransactions
    acl PATH_banking path_beg -i /api/bankReconciliations
    acl PATH_aggregator path_beg -i /api/aggregators
    acl PATH_audit path_beg -i /api/audits
    acl PATH_dashboard path_beg -i /api/dashboards
    acl PATH_report path_beg -i /api/reports

    use_backend be_bookkeeping if PATH_bookkeeping
    use_backend be_invoice if PATH_invoice
    use_backend be_expense if PATH_expense
    use_backend be_banking if PATH_banking
    use_backend be_aggregator if PATH_aggregator
    use_backend be_audit if PATH_audit
    use_backend be_dashboard if PATH_dashboard
    use_backend be_report if PATH_report

backend be_bookkeeping
    server s1 "${BOOKKEEPING_API_S1_PATH}" check
    server s2 "${BOOKKEEPING_API_S2_PATH}" check

backend be_invoice
    server s1 "${INVOICE_API_S1_PATH}" check

backend be_expense
    server s1 "${EXPENSE_API_S1_PATH}" check

backend be_banking
    server s1 "${BANKING_API_S1_PATH}" check

backend be_aggregator
    server s1 "${AGGREGATOR_API_S1_PATH}" check

backend be_audit
    server s1 "${AUDIT_API_S1_PATH}" check

backend be_dashboard
    server s1 "${DASHBOARD_API_S1_PATH}" check

backend be_report
    server s1 "${REPORT_API_S1_PATH}" check
```
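To illustrate what the gateway config does with a request, here is a small Python model of a subset of its routing rules: a case-insensitive path-prefix match (like `path_beg -i`) picks a backend, and the two bookkeeping servers are used in turn, mimicking HAProxy's default `roundrobin` balancing with equal weights. It is only a model; it ignores health checks (`check`), which in HAProxy take a failing server out of rotation.

```python
import itertools
from typing import Optional

# A subset of the ACLs above: (path prefix, backend), checked in order.
ROUTES = [
    ("/api/journals", "be_bookkeeping"),
    ("/api/accounts", "be_bookkeeping"),
    ("/api/invoices", "be_invoice"),
    ("/api/bills", "be_expense"),
    ("/api/aggregators", "be_aggregator"),
]

# be_bookkeeping has two servers; round robin is modelled with itertools.cycle.
BACKENDS = {
    "be_bookkeeping": itertools.cycle(["host.docker.internal:5012", "host.docker.internal:5013"]),
    "be_invoice": itertools.cycle(["host.docker.internal:5014"]),
    "be_expense": itertools.cycle(["host.docker.internal:5015"]),
    "be_aggregator": itertools.cycle(["host.docker.internal:5017"]),
}

def route(path: str) -> Optional[str]:
    """Return the server a request path is forwarded to, or None if no ACL matches."""
    lowered = path.lower()  # path_beg -i: case-insensitive prefix match
    for prefix, backend in ROUTES:
        if lowered.startswith(prefix.lower()):
            return next(BACKENDS[backend])
    return None  # no use_backend rule matched and there is no default_backend

if __name__ == "__main__":
    for p in ["/api/journals/1", "/API/Accounts", "/api/invoices/7", "/health"]:
        print(p, "->", route(p))
```

Running a second bookkeeping instance is then just another `server` line; clients keep calling the same gateway URL.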